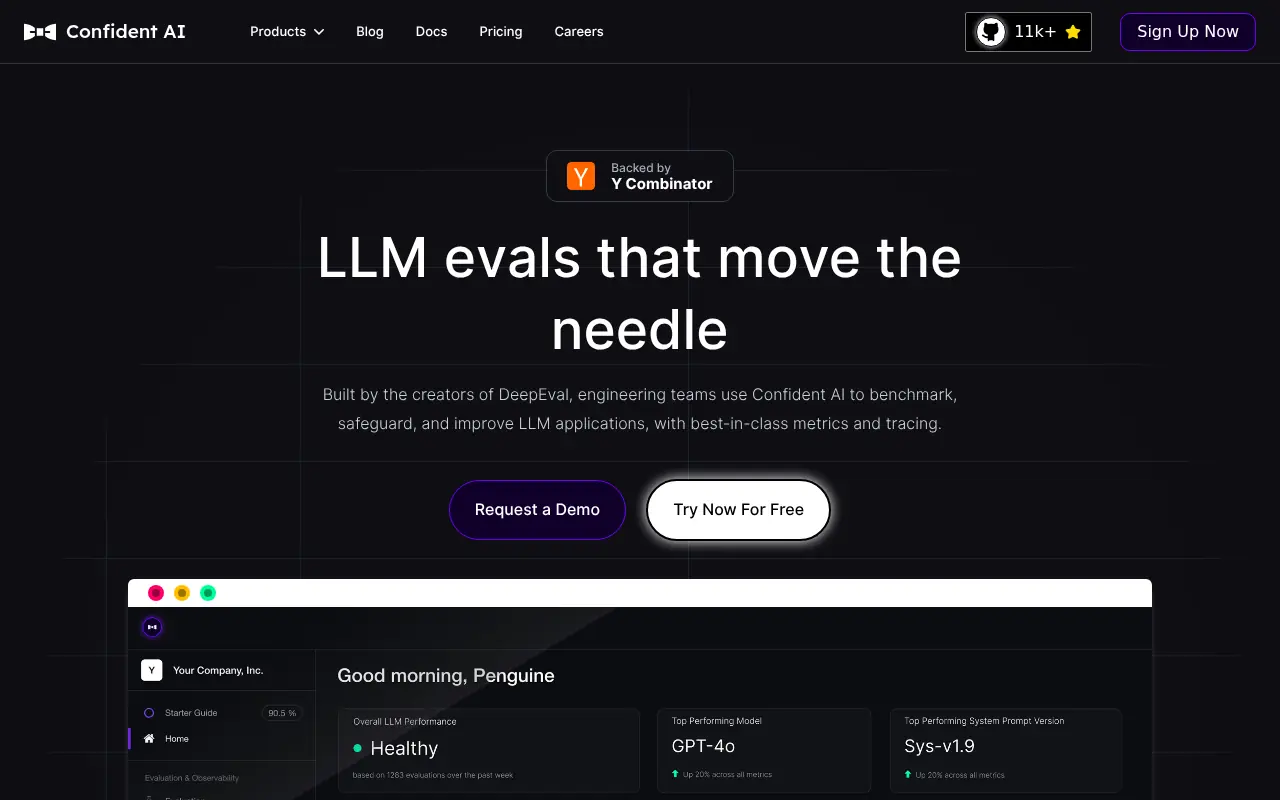

Confident AI

What is Confident AI?

Confident AI is a platform that helps organizations test, benchmark, and quality-assure their LLM applications, from chatbots and RAG pipelines to agentic workflows and core models. It aims to automate the evaluation, regression testing, and continuous monitoring of LLMs, so that technical teams can prevent regressions, optimize performance, and give stakeholders confidence in AI system outputs. The platform builds on the open-source DeepEval framework for flexible, scalable evaluations and provides a dashboard UI for real-time observability. For enterprises, it offers compliance options, flexible deployment, and performance analytics to keep AI deployments consistent and high-quality.

How to use Confident AI?

To use Confident AI, team members (engineers, QA, and product managers) integrate the DeepEval framework into their LLM application's codebase, attach the desired evaluation metrics to their code, and run automated tests either on custom datasets or in real time via tracing. The platform's dashboard visualizes the results, tracks performance over time, and highlights regressions, so users can compare versions, optimize prompts and models, and debug failures at the component level. Enterprise users can additionally use CI/CD integration, data residency options, and advanced permissions for secure, compliant workflows.
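The test loop described above (define metrics, score test cases with them, and fail when a score falls below a threshold) can be sketched in plain Python. This is a minimal, stdlib-only illustration under stated assumptions, not DeepEval's API: DeepEval ships its own test-case and metric classes (such as `LLMTestCase` and `AnswerRelevancyMetric`) and uses an LLM as the judge. Here `EvalCase`, `Metric`, `keyword_overlap`, and `run_suite` are hypothetical names, and a simple keyword-overlap score stands in for an LLM-as-a-judge metric so the example runs offline.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class EvalCase:
    """Hypothetical evaluation case: the prompt, the app's answer, and a reference answer."""
    input: str
    actual_output: str
    expected_output: str


@dataclass
class Metric:
    """Hypothetical metric: a scoring function returning a value in [0, 1] plus a pass threshold."""
    name: str
    score_fn: Callable[[EvalCase], float]
    threshold: float


def _words(text: str) -> set:
    # Lowercase and keep only word characters so "Paris." matches "Paris"
    return set(re.findall(r"\w+", text.lower()))


def keyword_overlap(case: EvalCase) -> float:
    """Toy stand-in for an LLM-as-a-judge score: share of reference words found in the output."""
    expected = _words(case.expected_output)
    if not expected:
        return 1.0
    return len(expected & _words(case.actual_output)) / len(expected)


def run_suite(cases: List[EvalCase], metrics: List[Metric]) -> List[str]:
    """Score every case with every metric; return failure messages (empty list = all passed)."""
    failures = []
    for i, case in enumerate(cases):
        for metric in metrics:
            score = metric.score_fn(case)
            if score < metric.threshold:
                failures.append(f"case {i}: {metric.name}={score:.2f} < {metric.threshold}")
    return failures


cases = [
    EvalCase("What is the capital of France?", "The capital of France is Paris.", "Paris"),
    EvalCase("Who wrote Hamlet?", "I am not sure.", "Shakespeare"),
]
failures = run_suite(cases, [Metric("keyword_overlap", keyword_overlap, threshold=0.5)])
# failures == ["case 1: keyword_overlap=0.00 < 0.5"]
```

Swapping `keyword_overlap` for a function that asks a judge model to grade the answer would turn this toy into the LLM-as-a-judge pattern the platform is built around; the pass/fail logic stays the same.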

Confident AI's Core Features

40+ LLM-as-a-judge metrics for comprehensive evaluation of model outputs.

Automated regression testing to catch breaking changes before production.

Real-time execution tracing and observability for ongoing AI performance monitoring.

Dataset curation, annotation, and generation tools for targeted testing.

CI/CD pipeline integration to automate LLM testing as part of continuous deployment.

Component-level tracing to identify and debug weaknesses in complex LLM pipelines.

Prompt management and versioning for collaborative workflow optimization.

HIPAA and SOC 2 compliance, with multi-region data residency for regulated industries.

Enterprise-grade access control, data masking, and permissions management.

Option to deploy on-premises or in your preferred cloud (AWS, Azure, GCP) with tailored support.

Intuitive product analytics dashboards for both technical and non-technical stakeholders.

Open-source integration via DeepEval, supporting a variety of frameworks and deployment environments.

Data-driven insights and comparison tools for iterative LLM optimization.
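Automated regression testing, listed above, amounts to comparing a candidate version's evaluation scores against a baseline. The sketch below assumes per-metric score lists are already available (for example, exported from two evaluation runs); the function name `find_regressions`, the metric names, and the 0.02 tolerance are hypothetical choices, not Confident AI settings.

```python
from statistics import mean
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, List[float]],
    candidate: Dict[str, List[float]],
    tolerance: float = 0.02,
) -> Dict[str, float]:
    """Return each metric whose mean score dropped by more than `tolerance`, mapped to the drop."""
    regressions = {}
    for metric, base_scores in baseline.items():
        cand_scores = candidate.get(metric)
        if not base_scores or not cand_scores:
            # Nothing to compare; a stricter gate might treat a missing metric as a failure
            continue
        drop = mean(base_scores) - mean(cand_scores)
        if drop > tolerance:
            regressions[metric] = round(drop, 4)
    return regressions


baseline = {"answer_relevancy": [0.90, 0.80, 0.85], "faithfulness": [0.95, 0.90, 0.92]}
candidate = {"answer_relevancy": [0.88, 0.82, 0.86], "faithfulness": [0.70, 0.75, 0.80]}
regressions = find_regressions(baseline, candidate)
# regressions == {"faithfulness": 0.1733}
```

The tolerance keeps small run-to-run noise in judge scores from being flagged; a slight improvement in `answer_relevancy` is ignored, while the drop in `faithfulness` is reported.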

Confident AI's Use Cases

1. Automated regression testing of LLM-based chatbots before deployment
2. Benchmarking and A/B testing different prompts, models, or parameters for optimal performance
3. Continuous monitoring of LLM outputs in production to catch and diagnose issues early
4. Quality assurance for compliance-sensitive industries (e.g., healthcare, finance, insurance)
5. Collaborative prompt management and versioning across engineering and product teams
6. Real-time observability and analytics for multi-turn conversational AI applications
7. CI/CD integration for seamless, automated LLM testing in software delivery pipelines
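Two of these use cases, A/B benchmarking of prompts and gating a CI/CD pipeline on evaluation results, reduce to simple aggregation over scores. The following is a hypothetical sketch: `pick_best_variant`, `ci_gate`, the variant names, and the thresholds are illustrative assumptions rather than part of Confident AI or DeepEval.

```python
from statistics import mean
from typing import Dict, List


def pick_best_variant(results: Dict[str, List[float]]) -> str:
    """Return the variant (prompt, model, or parameter set) with the highest mean score."""
    return max(results, key=lambda name: mean(results[name]))


def ci_gate(scores: List[float], threshold: float = 0.7, min_pass_rate: float = 0.9) -> int:
    """Return a process exit code: 0 if enough cases meet the threshold, 1 otherwise."""
    if not scores:
        return 1  # no results is treated as a failure rather than a silent pass
    pass_rate = sum(score >= threshold for score in scores) / len(scores)
    return 0 if pass_rate >= min_pass_rate else 1


results = {
    "prompt_v1": [0.72, 0.80, 0.65, 0.90],
    "prompt_v2": [0.85, 0.88, 0.79, 0.91],
}
best = pick_best_variant(results)  # "prompt_v2" (mean 0.8575 vs 0.7675)
exit_code = ci_gate(results[best])  # 0: every score is at least 0.7
```

A CI step could pass the value returned by `ci_gate` to `sys.exit` so that a failing evaluation blocks the merge. Comparing means alone ignores variance; with small samples, a real A/B comparison would also need to check whether the difference is meaningful.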

Analytics of Confident AI

Top Regions

| Region | Traffic Share |
|---|---|
| United States | 16.64% |
| India | 10.44% |
| Germany | 3.81% |
| Korea, Republic of | 3.48% |
| Brazil | 3.30% |

Top Keywords

| Keyword | Traffic | CPC |
|---|---|---|
| confident ai | 2.2K | $6.80 |
| llm arena | 112.0K | $2.14 |
| llm as a judge | 6.3K | $4.43 |
| jailbreak prompt for glm | 30 | -- |
| deepeval | 9.9K | $4.67 |